TPCx-AI

TPCx-AI is an end-to-end AI benchmark standard developed by the TPC.


The benchmark measures the performance of an end-to-end machine learning or data science platform. Its development has focused on emulating the behavior of representative industry AI solutions that are relevant to current production datacenters and cloud environments.

The standard addresses the needs of:


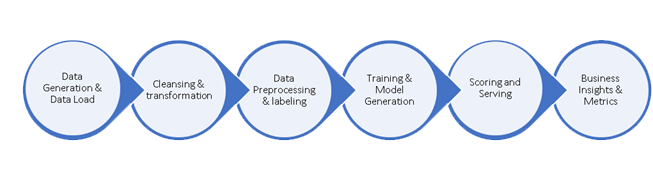

The benchmark standard scales a diverse, representative dataset up to terabytes. The data management stages and data science pipeline modeled by the benchmark exhibit the following characteristics:


The price-performance metric and maintenance requirements reported with each result make it easy to compare the viability of commercially available solutions from any vendor.
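As a rough illustration of how a price-performance figure supports such comparisons, the sketch below divides a priced configuration's total cost by its performance score and ranks results by that ratio. This is not the metric definition from the TPCx-AI specification: the `BenchmarkResult` fields, the vendor names, and every number are invented for the example and are not published results.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BenchmarkResult:
    """Hypothetical summary of one result; all fields are illustrative."""
    vendor: str
    total_system_price_usd: float  # assumed: priced configuration incl. maintenance
    performance: float             # assumed: a "higher is better" throughput score


def price_performance(result: BenchmarkResult) -> float:
    """Cost per unit of performance; lower is better."""
    if result.performance <= 0:
        raise ValueError("performance must be positive")
    return result.total_system_price_usd / result.performance


def rank_by_price_performance(results: List[BenchmarkResult]) -> List[BenchmarkResult]:
    """Return results ordered from best (cheapest per unit) to worst."""
    return sorted(results, key=price_performance)


# Invented numbers, used only to exercise the functions above.
results = [
    BenchmarkResult("vendor_a", 200_000.0, 1_000.0),
    BenchmarkResult("vendor_b", 150_000.0, 600.0),
]
ranked = rank_by_price_performance(results)
```

In this made-up case the cheaper system is not the better value: `vendor_b` costs less in total but more per unit of performance, so `vendor_a` ranks first.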

A Use Case defines a single problem solved by the deep learning and machine learning data science pipeline. It is framework- and syntax-agnostic and can be implemented in many ways. In the TPCx-AI kit, almost all of the implemented use cases include data generation, data management, training, scoring, and serving phases.
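The phase structure of a use case can be pictured as an ordered pipeline in which each phase consumes the output of the previous one and is timed separately. The following is a minimal sketch under that assumption, not code from the TPCx-AI kit: the phase identifiers, the `run_use_case` helper, and the toy "model" (a mean over cleaned values) are all hypothetical.

```python
import time
from typing import Callable, Dict, Tuple

# Hypothetical phase identifiers, in the order listed in the text.
PHASES = ["data_generation", "data_management", "training", "scoring", "serving"]

Phase = Callable[[dict], dict]


def run_use_case(phases: Dict[str, Phase]) -> Tuple[dict, Dict[str, float]]:
    """Run the phases in order, passing state along and timing each one."""
    state: dict = {}
    timings: Dict[str, float] = {}
    for name in PHASES:
        step = phases.get(name)
        if step is None:
            continue  # not every use case implements every phase
        start = time.perf_counter()
        state = step(state)
        timings[name] = time.perf_counter() - start
    return state, timings


# Toy phases: the "model" is simply the mean of the cleaned values.
def generate(state: dict) -> dict:
    return {**state, "raw": [1.0, 2.0, None, 3.0]}


def manage(state: dict) -> dict:
    return {**state, "clean": [x for x in state["raw"] if x is not None]}


def train(state: dict) -> dict:
    clean = state["clean"]
    return {**state, "model": sum(clean) / len(clean)}


def score(state: dict) -> dict:
    clean = state["clean"]
    mae = sum(abs(x - state["model"]) for x in clean) / len(clean)
    return {**state, "mae": mae}


def serve(state: dict) -> dict:
    return {**state, "prediction": state["model"]}


final_state, timings = run_use_case({
    "data_generation": generate,
    "data_management": manage,
    "training": train,
    "scoring": score,
    "serving": serve,
})
```

Because the pipeline only depends on each phase being a callable, the same driver works regardless of the framework used inside a phase, which mirrors the framework-agnostic definition of a use case.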


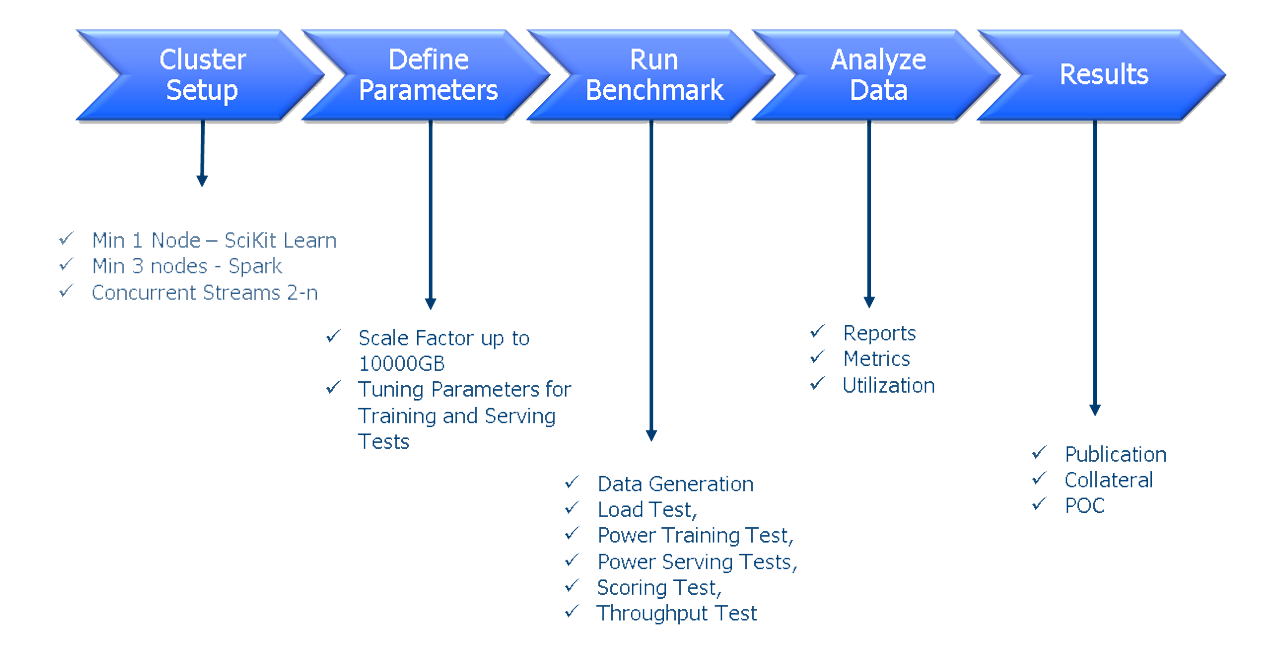

The TPCx-AI benchmark workflow makes it easy to run the benchmark in a standard single-server configuration or a cluster (scale-out) configuration, depending on the chosen implementation. After the initial setup, the user can tune additional parameters, as permitted by the TPCx-AI specification, to improve the performance of the solution. The Benchmark Test Run consists of a set of tests; once they have run, the data and results can be analyzed or reported.


All TPCx-AI results are audited by an Independent Certified TPC Auditor or a TPCx-AI Pre-Publication Board to certify compliance with the spirit and letter of the TPCx-AI Benchmark Standard. As a consequence, TPCx-AI results are verified, documented, and published.


Specification


More Information (here)
